The Central Government has adopted a strict stance on misinformation spread through AI-generated content, introducing amendments to the IT Rules 2021. From 20 February 2026, all social media platforms will be required to label AI-generated content and to remove deepfake photos and videos within three hours of receiving a direction from the government or a court. Platforms must also implement tools to detect and prevent malicious or fraudulent AI content.

Three Major Changes to Come into Effect

- Mandatory AI Labeling

All social media platforms such as YouTube, Twitter, and Facebook must clearly indicate which content is AI-generated. Once an AI label is applied to a photo, video, or audio, it cannot be removed or hidden. This ensures transparency and prevents users from being misled.

- Tools to Block Malicious AI Content

Platforms are instructed to develop and deploy tools capable of detecting offensive, misleading, or fraudulent AI-generated content before it reaches users. This includes deepfake videos, doctored images, and manipulated audio.

- Quarterly User Warnings

Social media companies must notify their users every three months about the potential legal consequences of misusing AI technology. This measure aims to educate users and deter malicious activity.

Clear Identification of AI Content

The new labeling rules will make it easy for users to distinguish between original and AI-generated content. For example, manipulated videos often circulate on social media showing impossible scenarios, such as an animal being “returned” to a location after an attack. Many viewers cannot tell the difference, but AI labels will now clarify the origin of such content.

No Room for Loopholes

Under the updated rules, AI labels must cover at least 10% of the visual area of images or videos, and AI-generated audio must be audibly identified. Platforms cannot hide or minimize these labels. They are also required to implement pre-upload detection systems to flag AI content before it is shared online.
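To make the 10% figure concrete, the check can be sketched as simple area arithmetic. This is only an illustration of the threshold as reported above, assuming a rectangular label on a rectangular frame; the function name `meets_minimum_coverage` and its parameters are hypothetical and do not come from the rules or from any platform's tooling.

```python
def meets_minimum_coverage(frame_w, frame_h, label_w, label_h, min_percent=10):
    """Return True if a rectangular label covers at least min_percent of the frame.

    All dimensions are in pixels. Integer arithmetic is used so that a label
    covering exactly the threshold is not rejected by floating-point rounding.
    The 10% default reflects the figure reported in this article (assumption).
    """
    frame_area = frame_w * frame_h
    label_area = label_w * label_h
    return label_area * 100 >= min_percent * frame_area


# A full-width banner 108 px tall on a 1920x1080 frame covers exactly 10%.
print(meets_minimum_coverage(1920, 1080, 1920, 108))

# A small 400x100 corner badge on the same frame covers about 1.9%.
print(meets_minimum_coverage(1920, 1080, 400, 100))
```

On a 1920x1080 video, a small corner watermark would fall well short of the threshold, while a full-width strip about a tenth of the frame height would just meet it.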

Protecting Users from Misinformation

The government expects these measures to empower users, prevent misleading information, and curb the misuse of AI on social media. Officials believe these rules will make the internet more reliable and trustworthy for all users.

The Ministry of Electronics and Information Technology (MeitY) released the draft of these amendments on 22 October 2025, and the rules take effect on 20 February 2026.

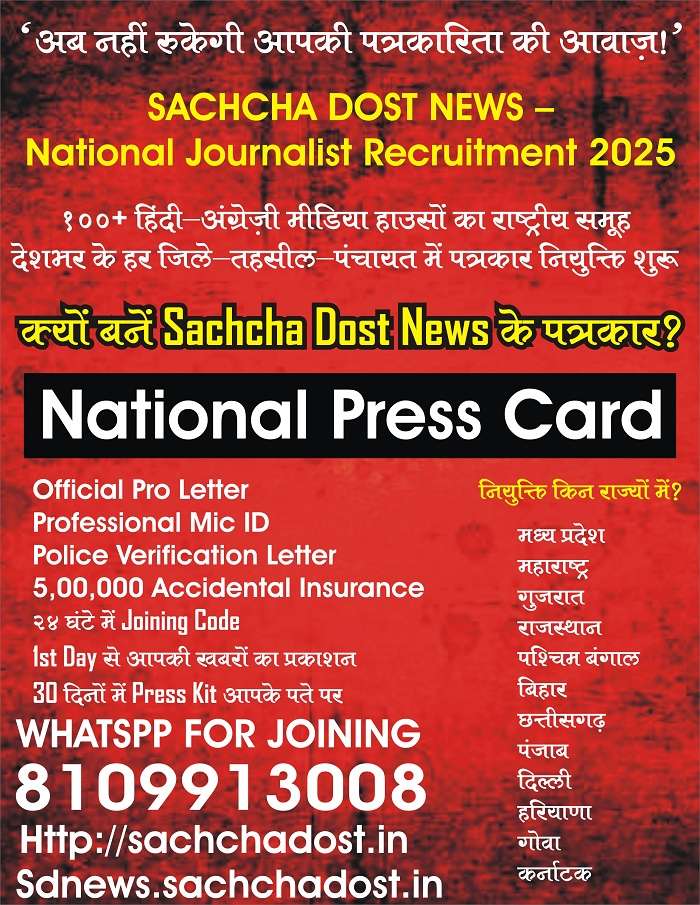
